User:Monsieurp/Gentoo Linux on ZFS

Introduction

This is yet another guide to install Gentoo Linux on a ZFS-formatted disk. This guide has been greatly inspired by Jonathan Vasquez' Gentoo Linux ZFS guide and Funtoo's ZFS Install guide.

Why yet another guide?

- I find Jonathan's disk layout overly complicated (no offence if you read that mate!).

- The Funtoo ZFS install guide is actually pretty well written but unfortunately has been written with Funtoo in mind.

- I took some notes during the installation and decided to put them online for others to read and learn from.

Throughout this guide, I will often link to the official Oracle ZFS documentation, a well-written document that gets to the point. Although it was written with Solaris in mind, most commands can be adapted for Linux.

Required Tools

Download the System Rescue CD ISO file with ZFS support and burn it to a CD or write it to a USB key. At the time of writing, the latest ISO file available is sysresccd-5.0.2_zfs_0.7.0.

There are many ways to go about burning an ISO file to a USB key, dd and unetbootin to name a few. This part won't be covered in this guide. You should look it up in your favourite search engine :-)

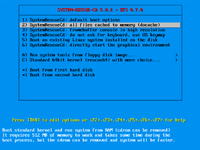

Booting the SR CD

Once you've burnt the ISO, power on the computer and boot from the CD drive or USB key. The System Rescue CD boots and you should land on the boot menu shown in the screenshot.

Here, pick either the 1st entry (default boot options) or the 2nd entry (all files cached to memory). On my server, I had to pick the 2nd one. Your mileage may vary.

After hitting enter, the system will then boot into a Gentoo Linux LiveCD and automatically log you into a zsh shell as root.

Let's get cracking!

Configuring the disks

Storage pool

We are creating a basic ZFS storage pool named rpool that contains only one disk. In this configuration, ZFS takes up the whole disk. There's no need to create additional partitions with the parted or fdisk commands since GRUB 2 now supports reading ZFS-formatted disks and booting from them just fine.

Creation

Here's the command to create the storage pool:

root #zpool create -f -o ashift=12 -o cachefile=/tmp/zpool.cache -O normalization=formD -O compression=lz4 -m none -R /mnt/gentoo rpool /dev/disk/by-id/ata-WDC_WD1600AAJS-75M0A0_WD-WMAV39764823

What just happened? Let's try to break down the command:

- zpool create: create a new pool.

- -f: force the creation of the pool.

- -o ashift=12: the selected value for the alignment shift, 12 in this case, which corresponds to 2^12 bytes or 4 KiB. This value can only be set once, at pool creation. You can find out more about the alignment shift here.

- -o cachefile=/tmp/zpool.cache: create a pool cache file in /tmp. This file is extremely important and MUST be copied over into the chroot directory. We will get back to it later.

- -O normalization=formD: the selected normalisation algorithm to use when comparing two Unicode file names on the file system, formD in this case. I found this pretty interesting link on the unicode.org website that explains at length the gory details of the different Unicode normalisation forms.

- -O compression=lz4: the selected compression algorithm to use when writing files to disk, lz4 in this case. This link explains the different compression algorithms built into ZFS much better than I could.

- -m none: do not set a mountpoint for this storage pool.

- -R /mnt/gentoo: the alternate root directory, which is actually just a temporary mount point for the installation.

- rpool: the name of this storage pool. You can customise this value if you want but we will use rpool throughout this guide.

- /dev/disk/by-id/ata-WDC_WD1600AAJS-75M0A0_WD-WMAV39764823: the path to the physical disk, also known as a VDEV in ZFS lingo. It must point to the actual disk you want to format. Remember to use by-id for the disk.
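To make the ashift arithmetic concrete, here is a small sketch that converts between an ashift value and a sector size in bytes. The function names are my own, and the commented-out sysfs line assumes your disk shows up as sda.

```shell
#!/bin/sh
# Convert an ashift value to a sector size in bytes: 2^ashift.
ashift_to_bytes() {
    echo $((1 << $1))
}

# Convert a power-of-two sector size in bytes to an ashift value (log2).
bytes_to_ashift() {
    size=$1
    shift_count=0
    while [ "$size" -gt 1 ]; do
        size=$((size / 2))
        shift_count=$((shift_count + 1))
    done
    echo "$shift_count"
}

ashift_to_bytes 12      # prints 4096
bytes_to_ashift 512     # prints 9

# On a real machine, the sector size reported by the kernel can be fed in
# (sda is an assumption, adjust to your disk):
# bytes_to_ashift "$(cat /sys/block/sda/queue/physical_block_size)"
```

So ashift=12 means ZFS aligns its writes on 4096-byte boundaries, while ashift=9 would match old 512-byte sectors.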

All in all, zpool create is the equivalent of the good old mkfs. I encourage you to read two links from the Oracle website here and here. They go over what a storage pool is about and the wide range of scenarios it can be used in.

Verification

Let's check to see whether the pool was created successfully:

root #zpool status

pool: rpool

state: ONLINE

scan: none requested

config:

NAME STATE READ WRITE CKSUM

rpool ONLINE 0 0 0

ata-WDC_WD1600AAJS-75M0A0_WD-WMAV39764823 ONLINE 0 0 0

errors: No known data errors
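If you'd rather script this check than eyeball the output, something along these lines should do. It is only a sketch: pool_is_healthy is a helper name I made up, and the script quietly does nothing when the zpool command is not available.

```shell
#!/bin/sh
# Return success only when the given health string is ONLINE.
pool_is_healthy() {
    [ "$1" = "ONLINE" ]
}

# Only query ZFS when the zpool command is actually available.
if command -v zpool >/dev/null 2>&1; then
    # -H drops the header line, -o health prints only the health column.
    health=$(zpool list -H -o health rpool 2>/dev/null)
    if pool_is_healthy "$health"; then
        echo "rpool is healthy"
    else
        echo "rpool reports: ${health:-unknown}" >&2
    fi
fi
```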

Datasets

In ZFS jargon, a dataset is, as its name suggests, a bunch of data. A dataset has several properties which can be set when creating the dataset. Datasets can be nested, that is, a dataset can be created inside another dataset. When doing so, child datasets inherit properties from their parents. You can create as many datasets as you wish with ZFS. However, too many datasets (over 100 according to the FreeBSD ZFS Guide) will slow down the execution of the zfs command as well as the startup of the system.

In order not to confuse the reader, our file system layout is as simple as it gets:

- a root dataset, unmounted and out of which the two following datasets are created

- the / dataset dedicated to the system

- the /boot dataset dedicated to the kernel, initramfs and GRUB 2 files

Feel free to customise this layout and create dedicated datasets for directories such as /usr/portage, /var, /var/log, etc. if you deem they each need to live on separate datasets.

Creation

Here are the commands to create the datasets according to the layout described above:

root #zfs create -o mountpoint=none -o canmount=off rpool/ROOT

root #zfs create -o mountpoint=/ rpool/ROOT/gentoo

root #zfs create -o mountpoint=/boot rpool/ROOT/boot

We have to mark the rpool/ROOT/boot dataset as bootable otherwise the system won't boot at all:

root #zpool set bootfs=rpool/ROOT/boot rpool

Next, we create a swap volume (a zvol) for the system, following the official ZFS on Linux guide:

root #zfs create -V 4G -b $(getconf PAGESIZE) -o logbias=throughput -o sync=always -o primarycache=metadata rpool/SWAP

root #mkswap /dev/zvol/rpool/SWAP

root #swapon /dev/zvol/rpool/SWAP

Have a look at the link above for an explanation of each option.

Verification

Let's check to see whether everything looks good:

root #zfs list -t all

NAME USED AVAIL REFER MOUNTPOINT

rpool 756K 143G 96K none

rpool/ROOT 288K 143G 96K none

rpool/ROOT/boot 96K 143G 96K /mnt/gentoo/boot

rpool/ROOT/gentoo 96K 143G 96K /mnt/gentoo

rpool/SWAP 4G 143G 56K -

root #zpool get bootfs rpool

NAME PROPERTY VALUE SOURCE

rpool bootfs rpool/ROOT/boot local

Getting the installation directory ready

Now that our datasets are created and mounted, let's prepare the installation directory. First, fetch the latest stage3 and extract it in the installation directory:

root #cd /mnt/gentoo

root #latest_stage3=$(curl http://distfiles.gentoo.org/releases/amd64/autobuilds/latest-stage3.txt 2>/dev/null | awk '$0 !~ /^#/ { print $1; exit; };')

root #wget "http://distfiles.gentoo.org/releases/amd64/autobuilds/${latest_stage3}"

root #tar xvf *.tar.* -C ./

root #mount -t proc none proc

root #mount --rbind /sys sys

root #mount --rbind /dev dev
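In case the awk one-liner looks cryptic, here is a sketch of what it does, run against a made-up sample instead of the real file. The sample content and the helper name first_stage3_path are mine, not real data. I also added a check that the first field looks like a tarball name, which is a small deviation from the command above.

```shell
#!/bin/sh
# Print the first field of the first line that is not a comment
# and whose first field names a tarball.
first_stage3_path() {
    awk '$0 !~ /^#/ && $1 ~ /\.tar\./ { print $1; exit }'
}

# Made-up sample in the layout of latest-stage3.txt (not real data).
sample='# Latest as of (sample timestamp)
# ts=0
20180101T000000Z/stage3-amd64-20180101T000000Z.tar.xz 123456789'

latest_stage3=$(printf '%s\n' "$sample" | first_stage3_path)
echo "$latest_stage3"
```

The value printed is the path relative to the autobuilds directory, which is what gets appended to the download URL.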

Replace latest-stage3.txt with latest-stage3-amd64-systemd.txt in the above curl command for a systemd setup.

Remember the /tmp/zpool.cache file I told you about? We are going to copy it over into the installation directory:

root #mkdir -p /mnt/gentoo/etc/zfs

root #cp /tmp/zpool.cache /mnt/gentoo/etc/zfs/zpool.cache
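Since this file matters so much, you may want to guard the copy against a missing or empty cache file. A minimal sketch, assuming a helper I called copy_pool_cache (not part of any ZFS tooling):

```shell
#!/bin/sh
# Copy a pool cache file into a target root,
# refusing to copy a missing or empty file.
copy_pool_cache() {
    src=$1
    target_root=$2
    if [ ! -s "$src" ]; then
        echo "cache file $src is missing or empty" >&2
        return 1
    fi
    mkdir -p "$target_root/etc/zfs" &&
        cp "$src" "$target_root/etc/zfs/zpool.cache"
}

# Invocation matching this guide's paths:
# copy_pool_cache /tmp/zpool.cache /mnt/gentoo
```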

Let's not forget the resolv.conf file to get out on the Interwebz from within the chroot:

root #cp /etc/resolv.conf /mnt/gentoo/etc/

It's time to chroot into the installation directory:

root #chroot /mnt/gentoo /bin/bash

root #. /etc/profile

root #export PS1="(zfschroot) $PS1"

root #cd

Configuring the system

At this point, the rest of the installation is fairly standard except a few steps that are really ZFS specific. If you're not quite sure about what you're typing, just stop and read the Gentoo Installation handbook.

Let's start off by configuring the system locales:

root #echo 'fr_FR.UTF-8 UTF-8' > /etc/locale.gen

root #locale-gen

root #eselect locale set fr_FR.UTF-8

root #env-update

Set the timezone we are in:

root #ln -sf /usr/share/zoneinfo/Europe/Paris /etc/localtime

Configure the hostname:

root #echo 'hostname="foo"' > /etc/conf.d/hostname

Configure /etc/fstab:

root #cat > /etc/fstab

/dev/zvol/rpool/SWAP none swap sw 0 0

^D

Configure /etc/portage/make.conf:

root #cat >> /etc/portage/make.conf

MAKEOPTS="-j4"

EMERGE_DEFAULT_OPTS="--with-bdeps=y --keep-going=y"

LINGUAS="en fr_FR"

GRUB_PLATFORMS="efi-64 pc"

^D
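The -j4 value suits my machine. If you'd rather derive it from your own CPU count, here is a small sketch. It assumes nproc (GNU coreutils) is available and falls back to 1 otherwise; matching the job count to the core count is a common rule of thumb, not a hard rule.

```shell
#!/bin/sh
# Build a MAKEOPTS line from the number of available CPU cores.
# nproc is part of GNU coreutils; fall back to 1 if it is unavailable.
cores=$(nproc 2>/dev/null || echo 1)
makeopts_line="MAKEOPTS=\"-j${cores}\""
echo "$makeopts_line"
```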

Copy Portage repositories configuration file:

root #cp /usr/share/portage/config/repos.conf /etc/portage/repos.conf

Synchronise the tree and read the news:

root #emerge --sync

root #eselect news read

Re-emerge @world, install vi and a DHCP client:

root #emerge -uDNav @world

root #emerge nvi dhcpcd

Unmask ZFS packages

Some of these packages haven't been marked stable yet. We need to manually add the list of packages to the /etc/portage/package.accept_keywords file:

root #cat >> /etc/portage/package.accept_keywords

sys-kernel/spl ~amd64

sys-fs/zfs ~amd64

sys-fs/zfs-kmod ~amd64

sys-boot/grub ~amd64

^D

We must enable the libzfs USE flag for GRUB 2 by adding it to a file in the /etc/portage/package.use directory:

root #echo "sys-boot/grub libzfs" > /etc/portage/package.use/grub

Unmask genkernel and gentoo-sources

For the time being, only kernel versions greater than or equal to 4.13.x are compatible with ZFS. You must also install the latest version of genkernel to embed ZFS in your kernel:

root #cat >> /etc/portage/package.accept_keywords

>=sys-kernel/genkernel-3.5.2.4 ~amd64

>=sys-kernel/gentoo-sources-4.13.0 ~amd64

>=app-misc/pax-utils-1.2.2-r2 ~amd64

^D

genkernel needs util-linux to be compiled with the static-libs USE flag:

root #echo ">=sys-apps/util-linux-2.30.2 static-libs" > /etc/portage/package.use/util-linux

Let's emerge genkernel, gentoo-sources and gptfdisk:

root #emerge sys-kernel/genkernel sys-kernel/gentoo-sources sys-apps/gptfdisk

At this point, you are all set to compile ZFS in your kernel. Generate a generic kernel config file:

root #cd /usr/src/linux

root #make menuconfig

Exit and save.

Compile the kernel with genkernel

Take a deep breath and type this in:

root #genkernel --makeopts=-j4 --zfs all || (emerge grub:2 && genkernel --makeopts=-j4 --zfs all)

What's going on here? I must admit it is a convoluted way to compile the kernel as well as everything needed to have a working system with ZFS support enabled. But hey, it works!

GRUB 2 compilation, with the libzfs USE flag turned on, ultimately fails during configure on the first try (you can try on your own before launching this command). Indeed, the configure script looks for a whole lot of ZFS include files in the /usr/src/linux directory. Unless we compile the kernel at least once beforehand, these files won't be present on the system. We need to generate them in some fashion with genkernel. However, at the end of the compilation process, genkernel calls ZFS commands, which are not installed on the system yet, and fails. We catch this failure with the || shell operator. In the second part of the command, we emerge GRUB 2 and run the genkernel command again. This time around, GRUB 2 will install just fine. Note that GRUB 2 with the libzfs USE flag installs the required ZFS dependencies automatically: sys-fs/zfs, sys-fs/zfs-kmod, and sys-kernel/spl.
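If the a || (b && a) shape is new to you, here is a toy version you can run anywhere. The two functions are stand-ins I made up for genkernel and emerge, and a marker file plays the role of "the ZFS tools are installed".

```shell
#!/bin/sh
# Stand-ins for the real commands; names and marker file are hypothetical.
workdir=$(mktemp -d)
marker="$workdir/zfs-tools-installed"

build_kernel() {            # plays the role of: genkernel --zfs all
    if [ -e "$marker" ]; then
        echo "build: ok"
    else
        echo "build: failed, ZFS tools missing"
        return 1
    fi
}

install_tools() {           # plays the role of: emerge grub:2
    : > "$marker"
    echo "install: ok"
}

# Same shape as the guide's one-liner: the right-hand side only runs
# when the first build fails, and the retry only runs if the install succeeds.
transcript=$(build_kernel || (install_tools && build_kernel))
status=$?
echo "$transcript"
rm -rf "$workdir"
```

The first build fails, the install runs, and the second build succeeds, so the whole line ends with exit status 0.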

Note that the kernel symbols seem to be related to a particular .config selection - if you modify your kernel config you need to rebuild sys-fs/zfs, sys-fs/zfs-kmod, and sys-kernel/spl after the kernel compile finishes, and then rebuild the initramfs.

root #genkernel --zfs --menuconfig all

root #emerge --ask sys-fs/zfs sys-fs/zfs-kmod sys-kernel/spl

root #genkernel initramfs

root #grub-mkconfig -o /boot/grub/grub.cfg

Otherwise, expect errors from modprobe zfs during boot.

It is highly recommended to save a working kernel, initramfs, and System.map when recompiling the kernel. Simply copy the working files in /boot and add .working at the end:

root #pushd /boot; for a in *$(uname -r); do cp "$a" "$a.working"; done; popd

root #grub-mkconfig -o /boot/grub/grub.cfg

Make sure the .working kernel boots correctly. Then you can run genkernel all you want and you'll always have a working backup that it won't overwrite.

Install GRUB 2

We've got to install the GRUB 2 bootloader in order to boot our system. Let's get GRUB to probe the /boot partition first:

root #grub-probe /boot

zfs

Careful, grub-probe MUST return zfs! If it doesn't, something is wrong with your setup. Start over and make sure to follow every step of the guide. Do not ignore this step and carry on, or you will end up with a broken system.

When zpool created our storage pool, it created partitions under a GPT scheme. In order to boot Gentoo Linux on a GPT partition under legacy boot (BIOS), GRUB 2 requires a BIOS boot partition. By design, ZFS left a very small unpartitioned space at the beginning of the disk. We will use the sgdisk utility, which is part of sys-apps/gptfdisk, to format this free space into a BIOS boot partition:

root #sgdisk -a1 -n2:48:2047 -t2:EF02 -c2:"BIOS boot partition" /dev/disk/by-id/ata-WDC_WD1600AAJS-75M0A0_WD-WMAV39764823
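For the curious, here is the arithmetic behind -n2:48:2047, which asks for partition 2 to span sectors 48 through 2047 inclusive. This sketch assumes 512-byte logical sectors, so adjust sector_bytes if your disk reports something else.

```shell
#!/bin/sh
# Size of a partition spanning sectors first..last (inclusive).
first=48
last=2047
sector_bytes=512   # assumption: 512-byte logical sectors

sectors=$((last - first + 1))
bytes=$((sectors * sector_bytes))
echo "$sectors sectors, $bytes bytes, $((bytes / 1024)) KiB"
# prints: 2000 sectors, 1024000 bytes, 1000 KiB
```

That is just under 1 MiB.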

Let's now run partx to refresh the list of partitions recognised by the kernel for the current disk:

root #partx -u /dev/disk/by-id/ata-WDC_WD1600AAJS-75M0A0_WD-WMAV39764823

We've got to let GRUB 2 know that our kernel lives in a ZFS partition. Edit the file /etc/default/grub and add the following two lines:

root #vi /etc/default/grub

...

GRUB_DISTRIBUTOR="Gentoo Linux"

GRUB_CMDLINE_LINUX="dozfs real_root=ZFS=rpool/ROOT/gentoo"

...
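A typo in that line is easy to make and painful to debug after a reboot, so here is a quick sanity check you could run on the file. The helper name has_zfs_cmdline is my own, and it only checks that both parameters are present, not that the dataset name is right.

```shell
#!/bin/sh
# Succeed if the given file sets GRUB_CMDLINE_LINUX with both
# dozfs and a real_root=ZFS= entry.
has_zfs_cmdline() {
    grep -E '^GRUB_CMDLINE_LINUX=' "$1" | grep -q 'dozfs' &&
        grep -E '^GRUB_CMDLINE_LINUX=' "$1" | grep -q 'real_root=ZFS='
}

# Invocation matching this guide's path:
# has_zfs_cmdline /etc/default/grub && echo "GRUB command line looks fine"
```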

Go ahead and install the bootloader:

root #grub-install /dev/disk/by-id/ata-WDC_WD1600AAJS-75M0A0_WD-WMAV39764823

Installing for i386-pc platform.

Installation finished. No error reported.

Let's check whether the ZFS module for GRUB has been installed:

root #ls /boot/grub/*/zfs.mod

/boot/grub/i386-pc/zfs.mod

Finally, we've got to generate the grub.cfg file in the /boot/grub directory so that the boot loader can boot our freshly generated kernel:

root #grub-mkconfig -o /boot/grub/grub.cfg

Generating grub configuration file ...

Found linux image: /boot/kernel-genkernel-x86_64-4.14.10-gentoo

Found initrd image: /boot/initramfs-genkernel-x86_64-4.14.10-gentoo

done

Services

The following services must be enabled for ZFS to properly start and run:

root #rc-update add zfs-import boot

root #rc-update add zfs-mount boot

root #rc-update add zfs-share default

root #rc-update add zfs-zed default

You need to adapt the commands to systemd if you previously selected the systemd stage3.
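I haven't tested the systemd route myself, so take this as a rough sketch. It prints, rather than runs, the systemctl commands I would expect to need. The unit names are an assumption based on what ZFS on Linux usually ships, so check them with systemctl list-unit-files 'zfs*' on your system first.

```shell
#!/bin/sh
# Print (rather than run) the systemctl commands for the ZFS units.
# The unit names below are an assumption; verify them on your system with:
#   systemctl list-unit-files 'zfs*'
zfs_systemd_commands() {
    for unit in zfs-import-cache.service zfs-mount.service \
                zfs-share.service zfs-zed.service zfs.target; do
        echo "systemctl enable $unit"
    done
}

zfs_systemd_commands
```

Once the unit names check out, the printed lines can be run as they are.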

User management

Change the root password:

root #passwd

Add a new user and set their password:

root #useradd -m -s /bin/bash -G wheel,portage foo

root #passwd foo

Wrapping it up

Let's exit, unmount everything and reboot into our new system:

root #exit

root #umount -lR {dev,proc,sys}

root #cd

root #swapoff /dev/zvol/rpool/SWAP

root #zpool export rpool

root #reboot